Distributed electricity generation? Groundhog day, again.

- Geoff Russell

- Sep 20, 2022

- 9 min read

[This post is a short holiday from the serious series on nuclear costs.]

Over the past decade or so I’ve heard numerous people extol the virtues of distributed energy systems. Here’s one (typical) set of claims from a factsheet from the United Nations Economic and Social Commission for Asia and the Pacific (UNESCAP):

“A decentralized energy system allows for more optimal use of renewable energy as well as combined heat and power, reduces fossil fuel use and increases eco-efficiency. … End users are spread across a region, so sourcing energy generation in a similar decentralized manner can reduce the transmission and distribution inefficiencies and related economic and environmental costs.”

All very interesting claims.

The Australian Energy Market Operator (AEMO) Integrated System Plan (ISP) is planning on some 10,000 km of additional transmission to connect renewables to the people who need their electricity. This is happening every where we have renewables and is entirely the opposite of the UN agency’s claim.

I’ve no idea what “eco-efficiency” is, but another UN organisation, the United Nations Economic Commission for Europe (UNECE), has scored nuclear power, the typical poster child for large centralised power stations (even SMRs, small modular reactors, designed to be less of a financial burden during construction, are large by comparison with renewable systems) as having the lowest life-cycle environmental impact of any energy source. I’m also mystified about “combined heat and power” – this can be from burning biomass (forests), but can’t come from wind or solar.

This post will consider the merits of sharing electricity generation from large, centralised generators as contrasted with the most extreme distribution – solar panels and batteries powering a large percentage of houses; possibly hooked up as micro-grids. But I want to start with a little history, because claims of the wonders of distributed systems have a very long history. I could have chosen motor vehicles as an illustrative example, and pointed to the ways this distributed transportation mode has throttled efficient public transport, but decided on something that will be less familiar and, hopefully, more entertaining.

A little mostly forgotten history

My first real job was operating a mainframe computer. This was in Adelaide in 1976. The computer was in its own air conditioned room. The rest of the building had no air conditioning, so in summer, other staff would find lame excuses to hang around in the computer room! For any readers in Adelaide, the building is the old MTT headquarters, the Goodman Building. It’s a heritage listed building with stunning western red cedar interior woodwork and glistening porcelain urinals. That first computer had 256k of ram and a glorious multifunction punch card reader. No, you didn’t just misread, 256 kilobytes was a big deal in 1976.

A computer program in those days was a deck of cards with holes punched in them. Each card was divided into a matrix of 10 rows and 80 columns. The pattern of holes in each column of the card represented a character – mostly letters or numbers. There were 80 columns in the card. So each line of program code was one card of 80 characters.

Punch card

Data was also stored in cards; huge trays of them. The multifunction card reader MFCR had two hoppers. Usually, input to your program was in one hopper and any output would either be printed or punched into cards in the second hopper.

I vividly remember my first major computer blunder. Mistakenly programming the MFCR to punch into cards in the wrong hopper. Think about it!

The second computer in my life was much bigger. In addition to punch cards, it had huge cabinets containing hard disk drives. Each drive was a set of large pizza sized disks. We had a row of four such cabinets. I did some googling to check, because my memory of the price was in the region of $100,000 each. How could that be? For just 40 megabytes per drive. There is a marvellous Wikipedia page about these disk drives, and my memory seems about right.

That second machine wouldn’t fit in that relatively small room in the Goodman building and we moved to a purpose-built room in the building that later became the Adelaide Casino, over the railway station. Also a grand old building with plenty of wooden staircases.

When I started a maths degree at Adelaide University, everybody in the department shared a single CDC mainframe and used it for computer-based assignments. Again, we’d type onto cards and deposit the cards in a tray. Operators would run them and place the results, printed on paper, in an output tray which we’d collect.

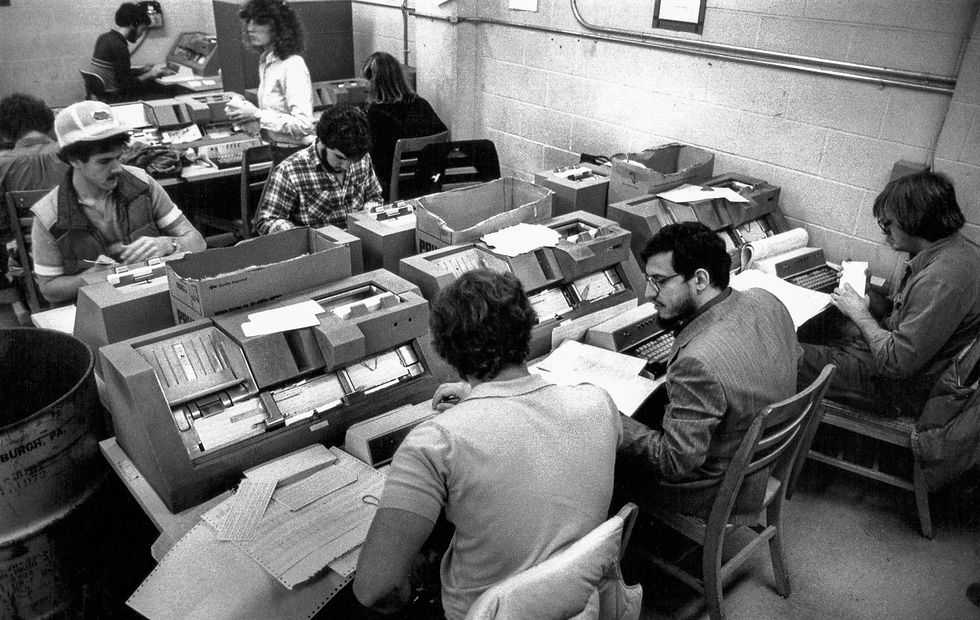

Programming with cards

Then, as now, even a single letter wrong in a program is usually enough to make it fail. The big difference between then and now, is that the turnaround time in those days, the time to make and fix a mistake might be 10 to 30 minutes. You learned to be careful! Even so, the consumption of punch cards and paper printouts was immense.

A history of sharing

The remarkable feature of this University computer was that it was shared by so many people. Multiple programs could run at once.

As the operators loaded your program cards, they’d precede each program by a card indicating how long the program was permitted on the machine; students had a 5 second time slice. A CDC Cyber 173 mainframe could do rather a lot in 5 seconds; after all it didn’t need to drive a hi-res graphical user interface or play cat videos and TikTok.

As I write, my desktop computer, a tiny low-powered Intel NUC, has 388 programs running; quasi-simultaneously. I was always dazzled by the intricacies of context switching on my first mainframe; that IBM 370/115 computer. Running programs in parallel is like reading a book, marking your spot, laying another book on top, starting to read that, marking your spot, and doing this with hundreds of books, while switching books every few pages, and having each book also marked with the priority with which it will be chosen. These days the computers in your phone switch “books” thousands of times a second and they have more than one pair of eyes to read with.

Today’s cloud computing services are the ultimate in sharing resources. They typically run multiple virtual computers on a single piece of hardware; each being defined by a board with some electronic chips on it. A cloud service provider has one or more buildings with racks of boards, typically with failover power supplies, disks and so on. You may think you are buying access to a computer when you buy a service on the cloud, but mostly you’ll be getting a time slice. Multiple things are being shared: the building space, the power supplies, and, most importantly, the skills of the people involved.

But before the clouds, there were storms

The first competition for mainframe computers was minicomputers. They were typically fridge-sized rather than room-sized. The hype started almost immediately. Mainframes were old hat and would disappear in favour of distributed computing, where every business would have a minicomputer instead of contracting out their work to an expensive mainframe. Sound familiar?

With the rise of the personal computer (PC), beginning in 1981, minicomputer hype started to fade, only to be replaced by PC hype. Big companies still had mainframes and smaller ones may have had a minicomputer or two, but their offices soon filled up with personal computers.

Some more powerful personal computers were called workstations and ran sophisticated sharing operating systems, just like mainframes, but better.

The distributed computing hype hit a high point with the transputer in the late 1980s. These were touted as the next big wave and were designed to be deployed in communicating clusters. I was enthralled by the technology and recall thinking how antiquated mainframes and personal computers were by comparison. There were conferences and the transputer designers won awards.

Have you heard of them? Probably not. The dustbin of history is both deep and wide. I picked transputers, but there were many other contenders for the next big thing; none were.

Over the past 40 years, there was a viral spread of distributed computing, meaning almost every business, large and small, acquired its own fleet of computers.

This growth has been followed by an almost equally large contraction as businesses realised they didn’t need computers, they didn’t even want them, they wanted services.

Only geeks care about the hardware behind the services. It’s like cars. My father spent hours trying to convince my mother that she couldn’t drive without understanding how a clutch worked; in detail. She didn’t care. Similarly, nobody cares about computers except geeks.

The waste, overhead and hassle of businesses operating their own computers to provide themselves with services was something that businesses didn’t understand until they experienced the consequences of failure. Failure to do their backups properly. Failure to implement security properly. Failure to implement redundant capacity with rapid failover. Failover means the transparent replacement of a failing computer by a standby machine.

Small companies didn’t have the expertise to handle these tasks properly and even medium-sized companies floundered.

Managing distributed computer systems

Back in the 1990s, while writing scheduling and rostering software for a smallish company handling the transport scheduling for the Sydney Olympics, I was also managing the company’s mail server software; somebody had to do it.

The manual for the Sendmail software, the tool of choice at the time, was about 1,000 pages. I needed to read it cover to cover multiple times. Later editions have two or three times as many pages.

Software to manage spam was an additional burden. Mail software has long been in a complex death spiral battle against hackers; and the battle is on-going.

All over the world, small and even medium companies were realising that this stuff is really hard. Email, failover, security … they are all hard. Installing and repairing computers was a distraction from core business.

So what do you do? You purchase centralised services running on large cloud based data centres with specialists who do nothing else except manage spam, manage email, manage backups, manage authentication, manage encryption. After all, why on Earth would a scheduling software company, or any other company, want to maintain the level of expertise required for such tasks?

It’s no different with your phone. You don’t want to manage the backup and constant upgrading required to deal with hackers and the like. When your phone dies, you want the safety of knowing everything you value is safely stored on a centralised database and can be magically restored. We want the services, but it’s centralised systems that make services simple.

The return to sharing

So computing is rapidly shifting back to an industry built around large, centralised and shared resources. They are a little different from the mainframes of the 1970s, but they have many of the characteristics.

The transformation back to centralised computing isn’t yet complete; there are still large companies with their own in-house experts who don’t use cloud services. There are still small companies limping along with insecure systems which get hacked. They are frequently sent bankrupt when a “simple” hardware failure exposes the weakness of their failover or backup system.

Distributed computing has one of the world’s biggest catastrophic failures. Its costs in terms of time, money and resources have been immense.

Put simply, it was a failure to realise that sharing benefits everybody.

Could we have foreseen this and gone directly from mainframes to cloud services? Probably not. We tend to learn from making mistakes far more readily than from foreseeing them. But can we perhaps break the mould with electricity and not make the same mistakes all over again?

Decentralised energy systems

The biggest spruikers of decentralised energy systems are probably too young to remember the failure of decentralised computing. Some are a particular kind of geek and they love managing their electricity. Most of us just want to plug our stuff in and make it work; and we’d prefer not to plug it in, if possible.

I watched one-time Australian Greens leader, Richard Di Natale, being interviewed some time back, pointing at his room full of lead acid batteries. “This is the future!” he crowed. I bloody well hope not.

Here are a few gaping weaknesses of decentralised energy systems. They are all blindingly obvious:

Inefficiency. The inefficiencies are twofold, first you need more mines and more stuff. If you put panels and batteries on every household to the extent that you can disconnect them from the grid, then each household needs an extraordinary system. Even Di Natale still kept his grid connection! So you end up with two systems – a centralised one running inefficiently for the few weeks of some years when the sun supplies less than usual, plus the household system, plus the grid. And if the households contribute to the grid, then it needs to be thicker, literally, the wires need to be more robust to handle the bi-directional load. The vast expansion of transmission infrastructure is also an obvious material inefficiency. The second inefficiency is, as with computer systems, the skills problem. It’s of lesser concern so I’ll ignore it.

Security. I mean this in the geek computing sense. Imagine when every inverter on the planet can be accessed from every smart phone. Non-geeks always underestimate the difficulties of good security; even more than geeks; and geeks have gotten this spectacularly wrong for decades. Every computer system on the planet is full of buggy software written in buggy languages. When the potential losses were just those cat videos and TikTok, then who cares? But when it’s the power grid? It’s only recently the Linux kernel announced plans to support a secure language (Rust). Linux has a history of taking security much more seriously than more popular computer operating systems, so I can only imagine the mess elsewhere.

Multiple points of failure. It’s ironic, but when you have something with a single point of failure, it gets done right. If I were ever in Taiwan during a massive earthquake, I'd hope to be on the 101st floor of Taipei 101; one of the tallest buildings on the planet. It’s designed to withstand quakes; whereas nobody bothers with this kind of robust engineering in small buildings. Where was the safest place to be during Japan's 2011 tsunami on the north east coast? At any of the nuclear reactors, of course. Nobody bothered building really big seawalls to protect lesser investments, let alone people, anywhere else along that coast, and thousands died as a result. But the reactors, as multibillion dollar investments, had impressive seawalls. Definitely not perfect, as it happens, but they still saved lives. The staff at all of the reactors along that coast survived while many of those without the protection of those seawalls died.

So, please next time you hear somebody talking about power plants as centralised dinosaurs, then you might like to remind them of the decentralised failure of your choosing; cars and computer systems are my two favourites.

If you're looking for a fun and exciting way to unwind https://onlinecasinoaustralia.online/casino/casino4u/ , look no further than online casinos in Australia. With a plethora of pokies to choose from and generous bonus programs, you can't go wrong.